Blowing up in memory

MySQL isn’t too concerned about table handler memory usage – it will allocate a row-sized buffer three times for each table invocation. There’s a few-year-old bug discussing UNION memory usage – for each mention in a UNION one can allocate nearly 200k of unaccounted memory – so a megabyte-sized query can already consume 7GB of RAM.

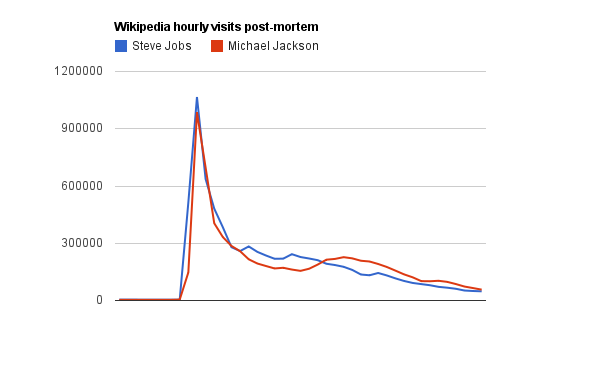

Partitioning though adds even more pain here – it will allocate those three buffers per each partition, so opening a table with 1000 partitions looks like this on memory profile:

Click to enlarge, and you will see 191MB spent to execute a simple single-row fetching query from a table (I filed a bug on this).

There’re multiple real life situations when this is painful (e.g. any kind of server stall may lead to multiple concurrent threads reading from same table, consuming additional gigabytes or tens of gigabytes of memory). It gets even more painful when combined with UNION bug – a megabyte query on an empty table can now consume 7TB of memory and I doubt anyone has that much on their MySQL servers :-)
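The arithmetic behind those figures can be sketched in a few lines. This is a back-of-the-envelope model, not MySQL code: the ~200k per UNION mention and the 1000 partitions come from the text above, while the ~30 bytes of query text per mention (`bytes_per_mention`) is an assumption picked for illustration.

```python
# Rough model of unaccounted handler memory for a large UNION query.
# From the post: nearly 200k is allocated per table mention in a UNION,
# and partitioning multiplies that by the number of partitions.
# ASSUMPTION: each mention costs about 30 bytes of query text
# (something like "SELECT 1 FROM t UNION ALL ").

PER_MENTION_BYTES = 200_000  # "nearly 200k" per mention, from the post


def union_memory_bytes(query_bytes, partitions=1, bytes_per_mention=30):
    """Estimate memory allocated for a UNION query of the given text size."""
    mentions = query_bytes // bytes_per_mention
    return mentions * PER_MENTION_BYTES * partitions


def human(n):
    """Format a byte count using decimal units."""
    for unit in ("B", "KB", "MB", "GB", "TB"):
        if n < 1000 or unit == "TB":
            return f"{n:.1f}{unit}"
        n /= 1000


if __name__ == "__main__":
    one_megabyte_query = 1_000_000
    print(human(union_memory_bytes(one_megabyte_query)))                   # no partitions
    print(human(union_memory_bytes(one_megabyte_query, partitions=1000)))  # 1000 partitions
```

Under that assumption the two calls land at roughly 7GB and 7TB, which matches the orders of magnitude quoted above.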

P.S. Also, check out how much memory can be wasted for malloc overhead, once discussed here.

P.P.S. And here you can see why innodb_max_dirty_pages_pct=0 doesn’t do what you’d expect.

On connections

MySQL is needlessly slow at accepting new connections. People usually work around that by having various sorts of connection pools, but there’s always a scale at which connection pools are not feasible. Sometimes connection avalanches come unexpected, and even if MySQL would have no trouble dealing with queries, it will have problems letting clients in. Something has to be done about it.

Lots of these problems have been low hanging fruits for years – it ‘was not detected’ by benchmarks because everyone who benchmarks MySQL would know that persistent connections are much faster and therefore wouldn’t look at connection speeds anymore.

Usually people attribute most of slowness to the LOCK_thread_count mutex – they are only partially right. This mutex does not just handle the counter of active running connections, but pretty much every operation that deals with increase or decrease of threads (thread cache, active thread lists, etc) has to hold it for a while.

Also, it is common wisdom to use thread cache, but what people quite often miss is that thread cache is something that was created back when OS threads were extremely expensive to create, and all it does is caching pthreads. It does not do any of MySQL specific thread caching magic – everything gets completely reinitialized for each incoming structure.

I decided to attack this problem based on very simple hypothesis – whatever ‘accept thread’ is doing, is bottleneck for whole process. It is very simple to analyze everything from this perspective (and I had some success looking at replication threads from this perspective).

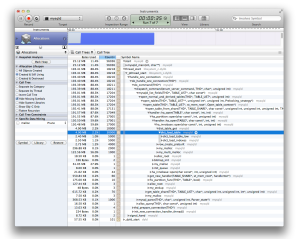

All we need is gdb and two loops – gdb attaches to accept thread, one loop does ‘breakpoint; continue’, another sends signals at a certain sampling rate (I picked 10Hz in order to avoid profiling bias). I posted those scripts on PMP page. After a lunch break I had 50k stacks (long lunch ;-) that I fed into graphviz for full data visualisation and could look at individually:

A picture is worth thousand words (well, is easier than looking at thousands of lines in stack aggregations), and I immediately noticed few things worth looking at:

- Initializing THD (MySQL thread) structure is CPU-heavy task that resides in choke-point thread

- There is way too much time spent in syscalls, whatever they do

- Too much memory allocation done by the master thread

- There’s mutex contention on thread cache waking up worker threads

- There’s needless mutex contention in few other places
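The stack aggregation step behind findings like these can be sketched roughly as follows. This is not the script from the PMP page, just a minimal illustration that assumes the samples are plain gdb `bt` output concatenated into one text; the sample frames, addresses and line numbers below are synthetic.

```python
import re
from collections import Counter

# Matches the function name in a gdb backtrace frame line, e.g.
#   "#0  0x00007f01 in poll () from /lib64/libc.so.6"
#   "#1  handle_connections_sockets () at mysqld.cc:5100"
# Names containing spaces (some C++ templates) are skipped by this sketch.
FRAME_RE = re.compile(r"^#\d+\s+(?:0x[0-9a-fA-F]+\s+in\s+)?(\S+)\s*\(")


def collapse_stacks(gdb_output):
    """Turn concatenated gdb 'bt' output into a Counter of collapsed stacks."""
    stacks = Counter()
    current = []
    for line in gdb_output.splitlines():
        stripped = line.strip()
        match = FRAME_RE.match(stripped)
        if not match:
            continue
        # Frame #0 starts a new sample, so flush the previous one.
        if stripped.startswith("#0 ") and current:
            stacks[",".join(current)] += 1
            current = []
        current.append(match.group(1))
    if current:
        stacks[",".join(current)] += 1
    return stacks


def leaf_share(stacks):
    """Fraction of samples whose innermost frame is each function."""
    total = sum(stacks.values())
    leaves = Counter()
    for stack, count in stacks.items():
        leaves[stack.split(",")[0]] += count
    return {fn: n / total for fn, n in leaves.items()}


# Synthetic sample: three stacks taken from a hypothetical accept thread.
SAMPLE = """\
#0  0x00007f01 in poll () from /lib64/libc.so.6
#1  0x00000000005a in handle_connections_sockets () at mysqld.cc:5100
#2  0x00000000005b in main () at mysqld.cc:4500
#0  0x00007f02 in accept () from /lib64/libc.so.6
#1  0x00000000005a in handle_connections_sockets () at mysqld.cc:5100
#2  0x00000000005b in main () at mysqld.cc:4500
#0  0x00007f01 in poll () from /lib64/libc.so.6
#1  0x00000000005a in handle_connections_sockets () at mysqld.cc:5100
#2  0x00000000005b in main () at mysqld.cc:4500
"""

if __name__ == "__main__":
    for stack, count in collapse_stacks(SAMPLE).most_common():
        print(count, stack)
```

The collapsed strings are also a convenient input for drawing a call graph, since every adjacent pair of names in a stack is an edge.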

I didn’t want to look at mutex contention issues first so I ended up with something as simple as looking at syscall costs.

- 15% was going into actual accept()

- 8.5% was going into poll()

- 8% went into fcntl()

- 7% went into setsockopt()

- 1.2% went into getsockname()

An strace on mysqld gives a picture that explains quite a bit:

poll([{fd=12, ...}, {fd=13, ...}], 2, -1) = 1

fcntl(12, F_GETFL) = 0x2 (flags O_RDWR)

fcntl(12, F_SETFL, O_RDWR|O_NONBLOCK) = 0

accept(12, {... sin_port=htons(59183), ...) = 32

fcntl(12, F_SETFL, O_RDWR)

getsockname(32, {... sin_port=htons(3306), ...) = 0

fcntl(32, F_SETFL, O_RDONLY)

fcntl(32, F_GETFL) = 0x2 (flags O_RDWR)

setsockopt(32, SOL_SOCKET, SO_RCVTIMEO, ...)

setsockopt(32, SOL_SOCKET, SO_SNDTIMEO, ...)

fcntl(32, F_SETFL, O_RDWR|O_NONBLOCK)

setsockopt(32, SOL_IP, IP_TOS, [8], 4)

setsockopt(32, SOL_TCP, TCP_NODELAY, [1], 4)

I’ll skip walking through the code, but essentially what it does here is (12 is accept socket, 32 is connection socket):

- poll() checks whether there are pending connections. If the server is busy, trying accept() first and falling back to poll() only on failure is a better approach. There are side effects with that idea though – other sockets may starve a bit, but it is solvable by injecting an occasional poll.

- What happens next is a bit sad. Instead of storing per-socket flags (nobody is touching that for now anyway), it gets the socket flags, figures out it is a blocking socket, sets it to nonblocking mode, accepts the connection, sets it back to blocking mode. Just setting to nonblocking at the start and using it forever that way is much cheaper and constipates way less.

- accept() itself can be scaled only by having parallel accept() threads. Maybe most of this post would be not necessary if there were multiple accept threads, but I’m not eager to go into that kind of refactoring for now.

- getsockname() is used just to verify if socket is correct (probably catching EINVAL later seems to be too complicated), it is a very pessimistic code path for a case that nearly never happens (it probably was added for some random Unix back from nineties)

- Next fcntl “get flags” call is quite unnecessary – this is a fresh socket and one shouldn’t expect anything special within it. Later non-blocking mode is set, so that overrides whatever was obtained here.

- Three out of four setsockopt()s are necessary evil (one turns off Nagle’s algorithm, two others set socket timeouts), so they have to be done before network I/O is done on the socket. Fourth setsockopt() is usually completely useless – not every network observes IP_TOS header, and one has to talk to network administrator first about decent values. I’d say it can be optional parameter (yay, more tuning options).

Pretty much every connection socket operation can be done later, in a worker thread, without consuming expensive accept thread time, and pretty much every syscall except accept() can be removed from a busy accept thread, which is what I did in my testing build.
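The shape of that idea can be shown with a small sketch. It is not the MySQL code nor my testing build, just an illustration in Python with hypothetical helper names (`make_listener`, `accept_batch`, `worker_setup`): the listening socket is made non-blocking once, accept() is tried before polling, and per-connection socket options are applied later by whoever picks the connection up.

```python
import select
import socket


def make_listener(host="127.0.0.1", port=0, backlog=128):
    """Create a listening socket that is switched to non-blocking mode once."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(backlog)
    listener.setblocking(False)  # set once, never toggled per accept
    return listener


def accept_batch(listener, poll_timeout=1.0, max_batch=64):
    """Try accept() first and fall back to polling only when nothing is pending."""
    accepted = []
    while len(accepted) < max_batch:
        try:
            conn, _addr = listener.accept()
        except BlockingIOError:
            if accepted:
                break  # queue drained, hand off what we have
            ready, _, _ = select.select([listener], [], [], poll_timeout)
            if not ready:
                break  # nothing arrived within the timeout
            continue
        accepted.append(conn)
    return accepted


def worker_setup(conn, timeout=30.0):
    """Per-connection setup, meant to run in a worker, not in the accept loop."""
    conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    # Python-level timeout standing in for SO_RCVTIMEO / SO_SNDTIMEO.
    conn.settimeout(timeout)
    return conn
```

The accept loop then issues a single syscall per connection on the busy path, and everything else happens outside the choke point.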

Once I got rid of syscalls I started looking at other low hanging fruits. The most obvious one was sprintf() called inside vio_new(). Though it accounted only for 4% of thread time, the uselessness of it was depressing. Here it is:

sprintf(vio->desc, (vio->type == VIO_TYPE_SOCKET ? "socket (%d)" : "TCP/IP (%d)"), vio->sd);

It formats a string that is not used at all by production builds (only few DBUG messages are calling vio_description()). Though I removed this code in non-debug build, as I was moving over network initialization to worker threads, whole my_net_init() and vio() ended up outside of accept thread anyway ;-)

The overall thread cache design is centered around LOCK_thread_count – lock is held while signaling threads, and threads that wake up need the lock too – so there’s lots of overhead involved in the coordination – 13% of time is spent just to pass the task to a worker thread.

Allowing multiple threads to wake up and multiple entries to be placed into thread cache before it is all drained (more of an InnoDB concurrency-queue with FLIFO approach) could be somewhat better – so would be worker threads accepting connections directly (I already said that, I guess). There’s simply too much time wasted waking up and sending threads to sleep, and quite some of that time is on a choke point.

THD initializations are somewhat simpler, as they don’t include SMP madness.

There’re some low hanging fruits of course there as well. For example THD initializer calls sql_rnd_with_mutex(), which locks thread count mutex. Simplest fix could be using another mutex, though lockless random function or on-demand variable initialization would help too.

Some initializers there are quite expensive too – e.g. Warning_info class could initialize dynamic storage only when actually used, and not at THD initialization chokepoint. THD::init can be moved to a worker thread, and lots of THD initialization could be moved over to it.

Quite a lot of time (12%) is spent on malloc() – and lots of that is for allocating lots of various fixed-size structures – slab allocator (or just more efficient malloc implementation) could cut on CPU time there. Of course, more drastic alternative is not dealing with THD at all during accept phase – one can pass stub structure to build upon later, or (oh, am I writing this again) moving accept() part to individual workers.

So far I tested just few optimizations – moved over vio/net initialization to worker threads, reduced number of syscalls, added a new mutex for rand initialization, and that alone got me additional 50% increase in connection accepts. Think how much more one could get from fixing this problem properly ;-)

TL;DR: MySQL sucks at accepting new connections, but there’re lots of low hanging fruit there. Ask your MySQL provider for a fix.

MySQL bug entries:

InnoDB subsystems in color

I tried to put every subdirectory of InnoDB codebase into a chart that would explain some of relations between subsystems and modules inside the source. This is what I got (click to enlarge):

Update: Check Vadim’s diagram for a more operational view of InnoDB

Another update: There’s a vector PDF version

Stonebraker trapped in Stonebraker 'fate worse than death'

Oh well, I know I shouldn’t poke directly at people, but they deserve that sometimes (at least in my very personal opinion). Heck, I even gave 12h window for this not to be hot-headed opinion.

Those who followed MySQL at facebook development probably know how much we focus on actual performance on top of mixed-composition I/O devices (flashcache, etc) – not just retreating to comfortable zone of in-memory (or in-pure-flash) data.

I feel somewhat sad that I have to put this truism out here – disks are way more cost efficient, and if used properly can be used to facilitate way more long-term products, not just real time data. Think Wikipedia without history, think comments that disappear on old posts, together with old posts, think all 404s you hit on various articles you remember from the past and want to read.

Building the web that lasts is completely different task from what academia people imagine building the web is.

I already had this issue with other RDBMS pioneer (there’s something in common among top database luminaries) – he also suggested that disks are things of the past and now everything has to be in memory, because memory is cheap. And data can be whatever unordered clutter, because CPUs can sort it, because CPUs are cheap.

They probably missed Al Gore message. Throwing more and more hardware without fine tuning for actual operational efficiency requirements is wasteful and harms our planet. Yes, we do lots of in-memory efficiency work, so that we reduce our I/O, but at the same time we balance the workload so that I/O subsystem provides as efficient as possible delivery of the long tail.

What happens in real world if one gets 2x efficiency gain? Twice more data can be stored, twice more data intensive products can be launched.

What happens in academia of in-memory databases, if one gets 2x efficiency gain? A paper.

What happens when real world doesn’t read your papers anymore? You troll everyone via GigaOM.

Though sure, there’s some operational overhead in handling sharding and availability of MySQL deployments, at large scale it becomes somewhat constant cost, whereas operational efficiency gains are linear.

Update: Quite a few people pointed out that I was dissing a person who has done incredible amount of contributions, or that I’m anti-academia. I’m not, and I extremely value any work that people do wherever they are, albeit I do apply critical thinking to whatever they speak.

In my text above (I don’t want to edit and hide what I said) I don’t mean that “a paper” is useless. Me and my colleagues do read papers and try to understand the direction of computer science and how it applies to our work (there are indeed various problems yet to solve). I’d love to come up with something worth a paper (and quite a few of my colleagues did).

Still, if someone does not find that direction useful, there’s no way to portray them the way the original GigaOM article did.

InnoDB locking makes me sad

Vadim and others have pointed at the index->lock problems before, but I think they didn’t do a good enough job of pointing out how bad it can get (the actual problem was hidden somewhere as some odd edge case). What ‘index lock’ means is generally the fact that InnoDB has table-level locking which will kill performance on big tables miserably.

InnoDB is a huge pie of layers, that have various locking behaviors, and are layered on top of each other, and are structured nicely as subdirectories in your innodb_plugin directory. Low level storage interfaces are done via os/ routines, then on top of that there’s some file space manager, fsp/, which allocates space for btr/ to live in, where individual page/ entities live, with multiple row/ pieces. There’re few other subsystems around, that got quite some attention lately – e.g. buf/ pool, transaction log/, and large trx/ transactions are composed of micro transactions living in mtr/.

If you live in memory, you care about buffer pool and transaction log performance, if you write insane amounts of data to in-memory buffers you hit mtr/ problems and depend on how fast you can write out log/ or flush out buf/. If you are in I/O-heavy land most of stuff you care about happens in btr/.

Generally InnoDB is quite good about read scalability in I/O bound environments – nowadays one can saturate really fast I/O devices and there will be plenty of parallel reads done. Major scalability problem in this field was read-ahead which was funneling all read-ahead activity into a small set of threads, but other than that there can be hundreds of parallel reads issued to underlying devices. Situation changes when writes are added to the mix, though again, there’re few different scenarios.

There’re two ways for InnoDB to write out updates to pages, “optimistic” and “pessimistic”. Optimism here means that only in-page (page/row) operation will be needed without changing the tree structure. In this case you can expect quite high parallelism – multiple pages can be read for that operation at a time, multiple of them can be edited at a time, then some serialization will happen while writing out changes to redo log and undo segments. Expect good performance.

The much worse case is when B-Tree is supposed to be reorganized and multiple page operations can happen; that’s pessimism. In this case whole index gets locked (via a read-write lock obtained from dict/), then B-Tree path is latched, then changes are done, then it is all unlocked until next row operation needs to hit the tree. Unfortunately, both ‘path is latched’ and ‘changes are done’ are expensive operations, and not only in-core, but are doing sync page read-ins, one at a time, which on busy systems serving lots of read load are supposed to be slow. Ironically, as no other operations can happen on the table at that time, you may find out you have spare I/O capacity.. ;-)

What gets quite interesting though is the actual operation needed to latch b-tree path. Usual wisdom would say that if you want to change a row (read-modify-write), you probably looked up the page already, so there won’t be I/O. Unfortunately, InnoDB uses a slightly more complicated B-Tree variant, where pages have links to neighbors, and tree latching does this (a bit simplified for reading clarity):

/* x-latch also brothers from left to right */

get_block = btr_block_get(space, zip_size, left_page_no, RW_X_LATCH, mtr);

get_block = btr_block_get(space, zip_size, page_no, RW_X_LATCH, mtr);

get_block = btr_block_get(space, zip_size, right_page_no, RW_X_LATCH, mtr);

So, essentially in this case, just because InnoDB is being pessimistic, it reads neighboring blocks to lock them, even if they may not be touched/accessed in any way – and bloats buffer pool at that time with triple reads. It doesn’t cost much if whole tree fits in memory, but it is doing three I/Os in here, if we’re pessimistic about InnoDB being pessimistic (and I am). So, this isn’t just locking problem – it is also resource consumption problem at this stage.
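A toy model makes the I/O cost difference easy to see. Nothing below is InnoDB code: `ToyBufferPool` and both update functions are hypothetical, and the only thing borrowed from the source is the left, current, right latching order quoted above.

```python
class ToyBufferPool:
    """Tracks which pages are cached and counts synchronous read-ins."""

    def __init__(self, cached_pages=()):
        self.cached = set(cached_pages)
        self.sync_reads = 0

    def get_block(self, page_no):
        """Return the page, paying one blocking read if it is not cached."""
        if page_no not in self.cached:
            self.sync_reads += 1  # would happen while the index lock is held
            self.cached.add(page_no)
        return page_no


def optimistic_update(pool, page_no):
    """In-page change: only the target page is needed."""
    pool.get_block(page_no)


def pessimistic_update(pool, left_page_no, page_no, right_page_no):
    """Tree change: siblings are latched left to right, so all three are read."""
    for p in (left_page_no, page_no, right_page_no):
        pool.get_block(p)


if __name__ == "__main__":
    cold = ToyBufferPool()
    pessimistic_update(cold, 10, 11, 12)
    print("cold pessimistic reads:", cold.sync_reads)

    warm = ToyBufferPool(cached_pages={11})  # target page already looked up
    pessimistic_update(warm, 10, 11, 12)
    print("warm pessimistic reads:", warm.sync_reads)
```

Even when the target page is already cached, the model still pays for both siblings, and it caches them whether or not they are touched.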

Now, as the dictionary lock is held in write mode, not only updates to this table stop, but reads too – think MyISAM kind of stop. Of course, this ‘table locking’ happens at entirely different layer than MyISAM. In MyISAM it is statement-length locking whereas in InnoDB this lock is held just for row operation on single index, but if statement is doing multiple row operations it can be acquired multiple times.

Probably there exist decent workarounds if anyone wants to tackle this – grabbing read locks on the tree while reading pages into buffer pool, then escalating lock to exclusive. A bit bigger architectural change would be allowing to grab locks on neighbors (if they are needed) without bringing in page data into memory – but that needs InnoDB overlords to look at it. Talk to your closest MySQL vendor and ask for a fix!

How do regular workloads hit this? Larger your records are, more likely you are to have tree changes, lower your performance will be. In my edge case I was inserting 7k sized rows – even though my machine had multiple disks, once the dataset fell out of buffer pool, it couldn’t insert more than 50 rows a second, even though there were many disks idle and capacity gods cried. It gets worse with out-of-page blobs – then every operation is pessimistic.

Of course, there’re ways to work around this – usually by taking the hit of sharding/partitioning (this is where common wisdom of “large tables need to be partitioned” mostly comes from). Then, like with MyISAM, one will have multiple table locks and there may be some scalability then.

TL;DR: InnoDB index lock is major architectural performance flaw, and that is why you hear that large tables are slower. There’s a big chance that there’re more scalable engines for on-disk writes out there, and all the large InnoDB write/insert benchmarks were severely hit by this.

MySQL metrics for read workloads

There are multiple metrics that are really useful for read workload analysis, that should all be tracked and looked at in performance-critical environments.

The most commonly used is of course Questions (or ‘Queries’, ‘Com_select’) – this is probably primary finger-pointing metric that can be used in communication with different departments (“why did your qps go up by 30%?”) – it doesn’t always reveal actual cost, it can be increase of actual request rates, it can be new feature, it can be fat fingers error somewhere in the code or improperly handled cache failure.

Another important one to note is Connections – MySQL’s costly bottleneck. Though most users won’t be approaching the ~10k/s area – at that point connection pooling starts actually making sense – it is worth checking for other reasons, such as “maybe we connect when we shouldn’t”, or needlessly reconnect, or actually should start looking more at thread cache performance or pooling options. There’re some neighboring metrics like ‘Bytes_sent’ – make sure you don’t hit 120MB/s on a gigabit network :-)

Other metrics usually are way more about what actually gets done. Major query efficiency signal for me for a long time used to be Innodb_rows_read. It is immediately pointing out when there are queries which don’t use indexes properly or are reading too much data. Gets a bit confusing if logical backup is running, but backup windows aside, this metric is probably one that is easy enough to track and understand. It has been extremely helpful to detect query plans gone wrong too – quite a few interesting edge cases could be resolved with FORCE INDEX (that’s a topic for another post already :-)

For I/O heavy environments there’re few metrics that show mostly the same – Innodb_buffer_pool_reads, Innodb_data_reads, Innodb_pages_read – they all show how much your requests hit underlying storage – and higher increases ask for better data locality, more in-memory efficiency (smaller object sizes!) or simply more RAM/IO capacity.

For a long time lots of my metrics-oriented performance optimization could be summed up in this very simple ruleset:

- Number of rows shown to user in the UI has to be as close as possible to rows read from the index/table

- Number of physical I/Os done to serve rows has to be as close to 0 as possible :-)

Something I like to look at is the I/O queue size (both via iostat and from InnoDB’s point of view) – Innodb_data_pending_reads can tell how loaded your underlying storage is – on rotating media you can allow multiples of your disk count, on flash it can already mean something is odd. Do note, innodb_thread_concurrency can be a limiting factor here.

Overloads can be also detected from Threads_running – which is easy enough to track and extremely important quality of service data.

An interesting metric, that lately became more and more important for me is Innodb_buffer_pool_read_requests. Though it is often used to express buffer pool efficiency as a ratio against ‘buffer pool reads’, it is actually much more interesting when compared against ‘Innodb_rows_read’. While Innodb_rows_read and Handler* metrics essentially show what has been delivered by InnoDB to upper SQL layer, there are certain expensive operations that are not accounted for, like index estimations.

Though tracking this activity helps I/O quite a bit (right FORCE INDEX reduces the amount of data that has to be cached in memory), there can be also various edge cases that will heavily hit CPU itself. A rough example could be:

SELECT * FROM table WHERE parent_id=X and type IN (1,2,4,6,8,…,20) LIMIT 10;

If there was an index on (parent_id,type) this query would look efficient, but would actually do range estimations for each type in the query, even if they would not be fetched anymore. It gets worse if there’s separate (type) index – each time query would be executed, records-in-range estimation would be done for each type in IN() list – and usually discarded, as going after id/type lookup is much more efficient.

By looking at Innodb_buffer_pool_read_requests we could identify optimizer inefficiency cases like this – and FORCE INDEX made certain queries 30x faster, even if we forced exactly same indexes. Unfortunately, there is no per-session or per-query metric that would do same – it could be extremely useful in sample based profiling analysis.

Innodb_buffer_pool_read_requests:Innodb_rows_read ratio can vary due to multiple reasons – adaptive hash efficiency, deeper B-Trees because of wide keys (each tree node access will count in), etc – so there’s no constant baseline everyone should adjust to.
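Tracking that ratio only needs two snapshots of the global status counters. A minimal sketch, assuming the snapshots have already been loaded into dictionaries (for example from `SHOW GLOBAL STATUS` output); the helper names and the sample numbers are made up for illustration.

```python
COUNTERS = (
    "Innodb_buffer_pool_read_requests",
    "Innodb_buffer_pool_reads",
    "Innodb_rows_read",
)


def status_delta(before, after, names=COUNTERS):
    """Difference of cumulative counters between two status snapshots."""
    return {name: int(after[name]) - int(before[name]) for name in names}


def read_efficiency(before, after):
    """Per-interval ratios discussed above; None when the divisor is zero."""
    d = status_delta(before, after)
    rows = d["Innodb_rows_read"]
    requests = d["Innodb_buffer_pool_read_requests"]
    reads = d["Innodb_buffer_pool_reads"]
    return {
        # logical page accesses per row handed to the SQL layer
        "requests_per_row": requests / rows if rows else None,
        # physical reads per row, ideally close to 0
        "physical_reads_per_row": reads / rows if rows else None,
        # classic buffer pool miss ratio
        "miss_ratio": reads / requests if requests else None,
    }


if __name__ == "__main__":
    # Made-up snapshots, values as strings the way a client would return them.
    before = {"Innodb_buffer_pool_read_requests": "1000",
              "Innodb_buffer_pool_reads": "10",
              "Innodb_rows_read": "100"}
    after = {"Innodb_buffer_pool_read_requests": "5000",
             "Innodb_buffer_pool_reads": "30",
             "Innodb_rows_read": "1100"}
    print(read_efficiency(before, after))
```

A sudden jump in `requests_per_row` without a matching change in rows read is the kind of signal that pointed at the estimation overhead described above.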

I deliberately left out query cache (here’s the reason), or adaptive hash (I don’t fully understand performance implications there :). In mysql@facebook builds we have some additional extremely useful instrumentation – wall clock seconds per various server operation types – execution, I/O, parsing, optimization, etc.

Of course, some people may point out that I’m writing here from a stone age, and that nowadays performance schema should be used. Maybe there will be more accurate ways to dissect workload costs, but nowadays one can spend few minutes looking at metrics mentioned above and have a decent understanding what the system is or should be doing.

On database write workload profiling

I always have difficulties with complex analysis schemes, so fall back to something that is somewhat easier. Or much easier. Here I will explain the super-powerful method of database write workload analysis.

Doing any analysis on master servers is already too complicated, as instead of analyzing write costs one can be too obsessed with locking and there’s sometimes uncontrollable amount of workload hitting the server beside writes. Fortunately, slaves are much better targets, not only because writes there are single-threaded, thus exposing every costly I/O as time component, but also one can drain traffic from slaves, or send more in order to cause more natural workload.

Also, there can be multiple states of slave load:

- Healthy, always at 0-1s lag, write statements are always immediate

- Spiky, usually at 0s lag, but has jumps due to sometimes occurring slow statements

- Lagging, because of read load stealing I/O capacity

- Lagging (or not catching up fast enough), because it can’t keep up with writes anymore, even with no read load

Each of these states is interesting by itself, and may have slightly different properties, but pretty much all of them are quite easy to look at using replication profiling.

The code for it is somewhat straightforward:

(while true; do

echo 'SELECT info FROM information_schema.processlist

WHERE db IS NOT NULL AND user="system user"; '

sleep 0.1; done) | mysql -BN | head -n 100000 > replication-sample

There are multiple ways to analyze it, e.g. finding slowest statements is as easy as:

uniq -c replication-sample | sort -nr | head

More advanced methods may group up statements by statement types, tables, user IDs or any other random metadata embedded in query comments – and really lots of value can be obtained by doing ad-hoc analysis using simply ‘grep -c keyword replication-sample’ – to understand what share of your workload certain feature has.
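Such ad-hoc grouping can be sketched in a few lines once the sample file is loaded as a list of statements, one per sample. The function names are hypothetical, and the assumption is that idle samples show up as the literal string NULL, as `mysql -BN` prints them.

```python
from collections import Counter
from itertools import groupby


def longest_runs(samples, top=10):
    """Like `uniq -c | sort -nr | head`: longest runs of consecutive identical samples."""
    runs = [(len(list(group)), stmt) for stmt, group in groupby(samples)]
    return sorted(runs, key=lambda run: run[0], reverse=True)[:top]


def share_by_statement_type(samples):
    """Share of busy samples per leading SQL keyword (UPDATE, INSERT, ...)."""
    counts = Counter(
        s.split(None, 1)[0].upper()
        for s in samples
        if s.strip() and s.strip() != "NULL"
    )
    total = sum(counts.values())
    return {keyword: n / total for keyword, n in counts.items()}


def keyword_share(samples, keyword):
    """Like `grep -c keyword`, expressed as a fraction of all samples."""
    if not samples:
        return 0.0
    return sum(keyword in s for s in samples) / len(samples)


if __name__ == "__main__":
    # Made-up samples; a real run would read the replication-sample file.
    samples = [
        "UPDATE a SET x=1 WHERE id=5",
        "UPDATE a SET x=1 WHERE id=5",
        "UPDATE a SET x=1 WHERE id=5",
        "INSERT INTO b VALUES (1)",
        "UPDATE a SET x=1 WHERE id=5",
        "NULL",
    ]
    print(longest_runs(samples, top=3))
    print(share_by_statement_type(samples))
    print(keyword_share(samples, "UPDATE"))
```

Since every sample represents the same slice of wall clock time, these shares approximate the share of replication thread time, not the share of statement counts.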

I already mentioned, that there are different shapes of slave performance, and it is easy to test it in different shapes. One of methods is actually stopping a slave for a day, then running the sampler while it is trying to catch up. It will probably have much more buffer pool space usable for write operations, so keep that in mind – certain operations that are depending on larger buffer pools would be much faster.

This is really simple, although remarkably powerful method, that allows quite deep workload analysis without spending too much time on statistics features. As there’s no EXPLAIN for UPDATE or DELETE statements, longer, coarser samples allow detecting deviations from good query plans too.

Systematic use of it has allowed to reveal quite a few important issues that had to be fixed – which were not that obvious from general statistics view. I like.

5.6!

As extremely happy user of crash-safe-slave functionality since 4.0, I hereby welcome this feature in upcoming 5.6 release!

5.6 seems to be strongest production-support release since introduction of InnoDB, solving issues of long running high performance systems, that were forced to use Percona/M@FB/GooglePatch/.. before. Good!

A case for FORCE INDEX

I remember various discussions in different mediums where people were building cases against use of FORCE INDEX in SQL queries. I’ll hereby suggest using it way more often, but first I’ll start with a small explanation.

For ages, the concept of index statistics affecting query plans has been clogging minds of DBAs, supported by long explanations of MyISAM and InnoDB manuals. Actually, statistics are used just for determining which index to use for a joined table, as predicate is not known at the time of ‘optimization’.

What happens if you do a simple query like:

SELECT * FROM table WHERE a=5 AND b=6

? If there’s an index that enforces uniqueness on (a,b), it will be used – this is short-path for PRIMARY KEY lookups. Otherwise, it will go to any index, composite or not, that can satisfy either a or b (or both), and evaluate how many rows it will fetch from it using the provided criteria.

Now, contrary to what people usually think, the row count evaluation has nothing really much to do with cardinality statistics – instead it builds the range that the known predicate can check on existing index, and does two full B-Tree dives to the index – one at the start of the range, and one at the end of it. For each possible index.

This simply means that even if you are not using the index to execute query, two leaf pages (and all the tree branches to reach them) will end up being fetched from disk into the cache – wasting both I/O cycles and memory.

There’s also quite interesting paradox at this – in some cases, more similar other indexes are, more waste they create because of rows-in-range checks. If a table has indexes on (a,b,c) and (a,b,d), query for (a,b,d) will be best satisfied by (a,b,d) index, but will also evaluate range sizes for (a,b) on the (a,b,c) index. If the first index were (a,c,b), it would be only able to check head and tail of (a) – so way less B-Tree positions would be cached in memory for the check. This makes better indexing sometimes fare worse than what they’re worth in benchmarks (assuming that people do I/O-heavy benchmarking :)

The easy way out is using FORCE INDEX. It will not do the index evaluation – and no B-Tree dives on unneeded index.
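The difference can be illustrated with a toy model of the rows-in-range phase. This is not optimizer code: it only assumes what is described above (two B-Tree dives per index that can use a prefix of the predicate), and it further assumes that with FORCE INDEX only the forced index is evaluated. Index names and helper functions are hypothetical.

```python
def usable_prefix(index_columns, predicate_columns):
    """Leading index columns that are covered by equality predicates."""
    prefix = []
    for column in index_columns:
        if column not in predicate_columns:
            break
        prefix.append(column)
    return tuple(prefix)


def range_dives(indexes, predicate_columns, forced=None):
    """Map index name -> (prefix used for the range, number of B-Tree dives)."""
    dives = {}
    for name, columns in indexes.items():
        if forced is not None and name != forced:
            continue  # ASSUMPTION: other indexes are not evaluated at all
        prefix = usable_prefix(columns, predicate_columns)
        if prefix:
            dives[name] = (prefix, 2)  # one dive at range start, one at range end
    return dives


if __name__ == "__main__":
    indexes = {"abc": ("a", "b", "c"), "abd": ("a", "b", "d")}
    predicate = {"a", "b", "d"}
    print(range_dives(indexes, predicate))                # both indexes get dives
    print(range_dives(indexes, predicate, forced="abd"))  # only the forced one
    print(range_dives({"acb": ("a", "c", "b")}, predicate))  # prefix shrinks to (a)
```

In the first call the unused (a,b,c) index still costs two dives on the (a,b) prefix, which is exactly the waste FORCE INDEX avoids.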

In my edge case testing with real data and skewed access pattern hitting a second index during ‘statistics’ phase has increased execution time by 70%, number of I/Os done by 75%, number of entrances into buffer pool by 31% and bloated buffer pool with data I didn’t need for read workload.

For some queries like “newest 10 entries” this will actually waste some space preheating blocks from the other end of the range that will never be shown – there will definitely be a B-Tree leaf page in buffer pool with edits from few years ago because of RIR. Unfortunately, the only MySQL-side solution for this is HANDLER interface (or probably HandlerSocket) – but it doesn’t make using FORCE INDEX not worth it – it just pushes towards making FORCE INDEX be much more forceful.

So, use the FORCE, Luke :)